Activation functions shape a neural network's behavior by determining whether, and how strongly, each neuron fires. ReLU is currently the most commonly used. The visualizations below should make each function more intuitive, and the code samples let you run them on your own machine.

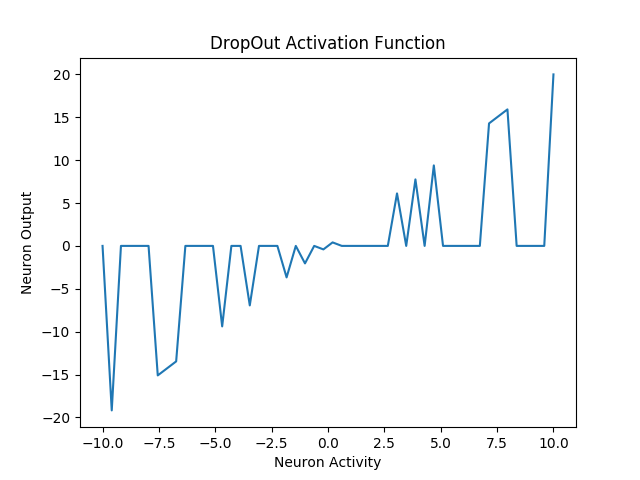

Dropout

`# TensorFlow 1.x`
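Dropout is strictly a regularization technique rather than an activation function, but it is applied to activations in much the same place. TensorFlow 1.x provides it as `tf.nn.dropout`, which takes a `keep_prob` argument. The following is only a framework-free sketch of the idea (inverted dropout) in plain Python; the function name `dropout` and the `rng` parameter are assumptions made for this illustration.

```python
import random


def dropout(values, keep_prob=0.5, rng=None):
    """Inverted dropout on a list of floats (illustrative sketch).

    Each value is kept with probability keep_prob and scaled by
    1 / keep_prob, so the expected sum is unchanged; otherwise it is
    replaced by 0.0.
    """
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    rng = rng or random.Random()  # hypothetical hook for reproducible runs
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]
```

With `keep_prob=0.5`, roughly half of the inputs are zeroed and the survivors are doubled. Dropout is normally active only during training.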

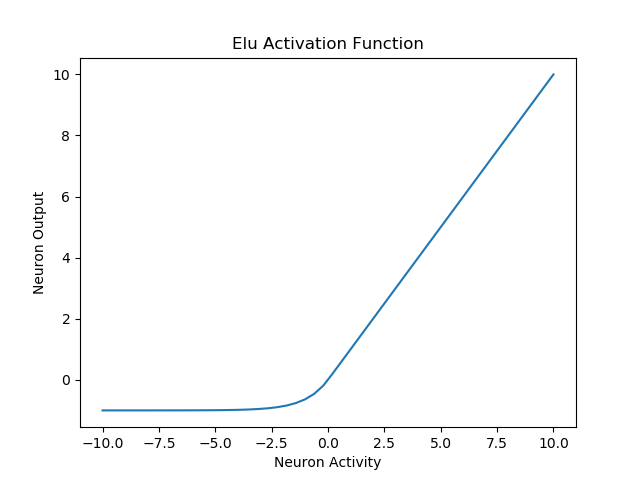

ELU

`# TensorFlow 1.x`
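To make the shape in the plot concrete, here is a minimal plain-Python sketch of the ELU formula, assuming the usual definition with a scale parameter `alpha` (the helper name `elu` is chosen for this illustration). In TensorFlow 1.x the corresponding op is `tf.nn.elu`.

```python
import math


def elu(x, alpha=1.0):
    """Exponential linear unit: x for x > 0, alpha * (exp(x) - 1) otherwise."""
    # math.expm1 computes exp(x) - 1 accurately for x close to zero.
    return x if x > 0 else alpha * math.expm1(x)
```

For large negative inputs the output saturates at `-alpha` instead of being clipped to zero.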

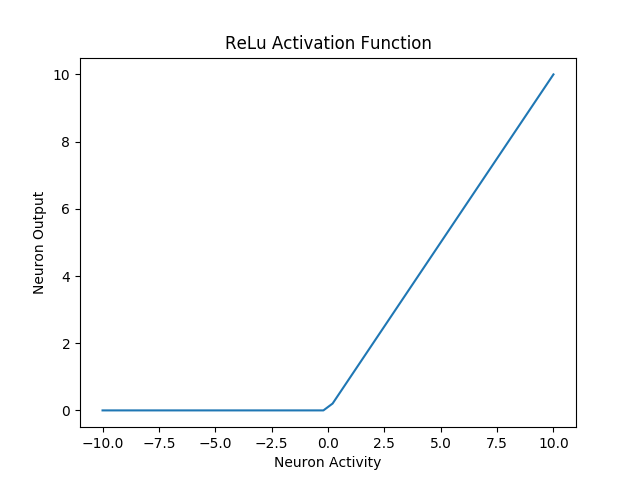

ReLU

`# TensorFlow 1.x`
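ReLU is simple enough to write in one line. The sketch below is plain Python for illustration only (the name `relu` is an assumption here); in TensorFlow 1.x the op is `tf.nn.relu`.

```python
def relu(x):
    """Rectified linear unit: pass positive inputs through, clip the rest to 0."""
    return max(0.0, x)
```

For example, `relu(-3.0)` gives `0.0` and `relu(2.5)` gives `2.5`.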

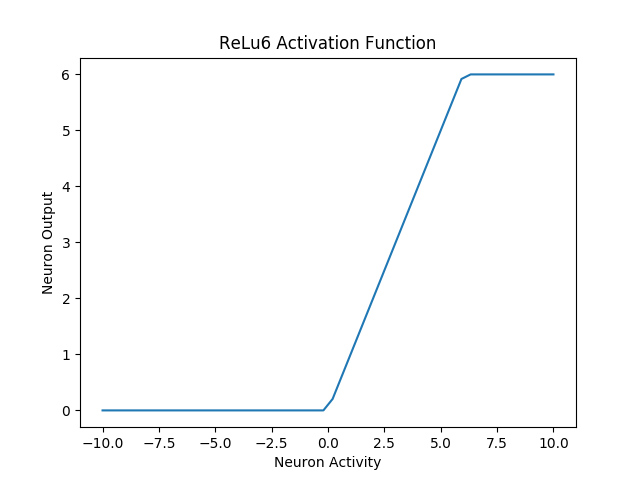

ReLU6

`# TensorFlow 1.x`
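ReLU6 is ReLU with the output additionally capped at 6. A minimal plain-Python sketch, with the helper name `relu6` assumed for illustration (TensorFlow 1.x exposes it as `tf.nn.relu6`):

```python
def relu6(x):
    """ReLU capped at 6: min(max(x, 0), 6)."""
    return min(max(0.0, x), 6.0)
```

Inputs below 0 map to `0.0`, inputs above 6 map to `6.0`, and everything in between passes through unchanged.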

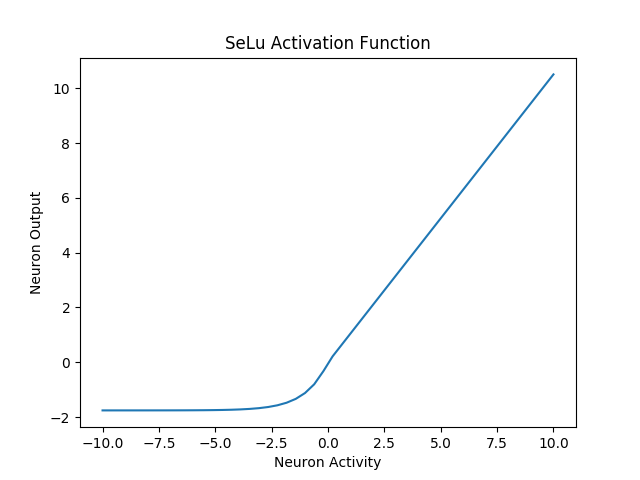

SELU

`# TensorFlow 1.x`
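SELU is a scaled ELU with two fixed constants. The sketch below writes the formula in plain Python, assuming the standard published constants; the names `SELU_ALPHA`, `SELU_SCALE`, and `selu` are chosen for this illustration. The TensorFlow op is `tf.nn.selu`.

```python
import math

# Standard SELU constants (assumed here, rounded to double precision).
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def selu(x):
    """Scaled ELU: scale * x for x > 0, scale * alpha * (exp(x) - 1) otherwise."""
    return SELU_SCALE * (x if x > 0 else SELU_ALPHA * math.expm1(x))
```

Positive inputs are multiplied by the scale factor, while large negative inputs saturate at `-SELU_SCALE * SELU_ALPHA`.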

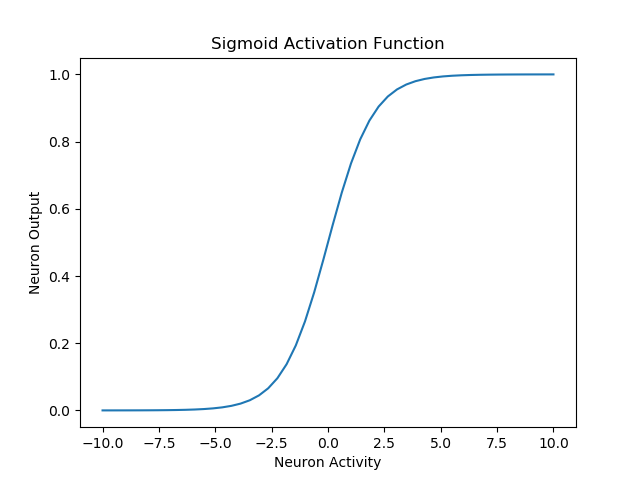

Sigmoid

`# TensorFlow 1.x`
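The sigmoid squashes any real input into the range (0, 1). Here is a minimal plain-Python sketch of it, written in a numerically stable form; the helper name `sigmoid` is an assumption for this example. In TensorFlow 1.x the op is available as `tf.sigmoid`.

```python
import math


def sigmoid(x):
    """Logistic sigmoid 1 / (1 + exp(-x)), computed without overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, rewrite as exp(x) / (1 + exp(x)) so exp never overflows.
    e = math.exp(x)
    return e / (1.0 + e)
```

Branching on the sign of `x` keeps the argument to `math.exp` non-positive, which avoids an `OverflowError` for inputs of large magnitude.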

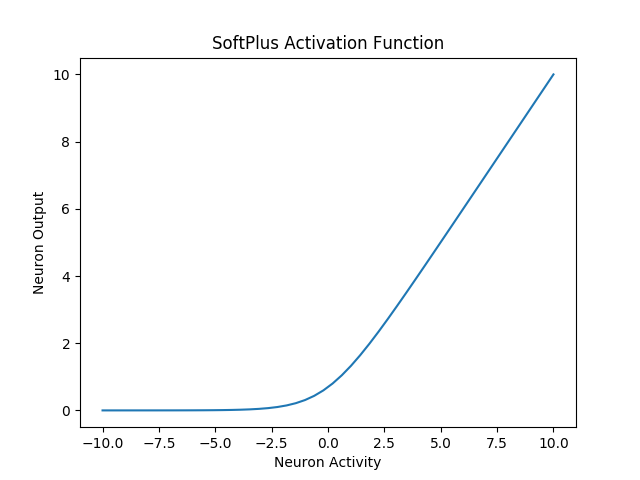

SoftPlus

`# TensorFlow 1.x`
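Softplus is a smooth approximation of ReLU, defined as log(1 + exp(x)). The sketch below is a plain-Python illustration in a numerically stable form, with the name `softplus` assumed for this example; the TensorFlow 1.x op is `tf.nn.softplus`.

```python
import math


def softplus(x):
    """Softplus log(1 + exp(x)), rewritten to avoid overflow for large x."""
    # log(1 + exp(x)) == max(x, 0) + log(1 + exp(-|x|))
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))
```

For large positive inputs the result approaches `x`, and for large negative inputs it approaches 0, which matches the ReLU-like curve in the plot.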

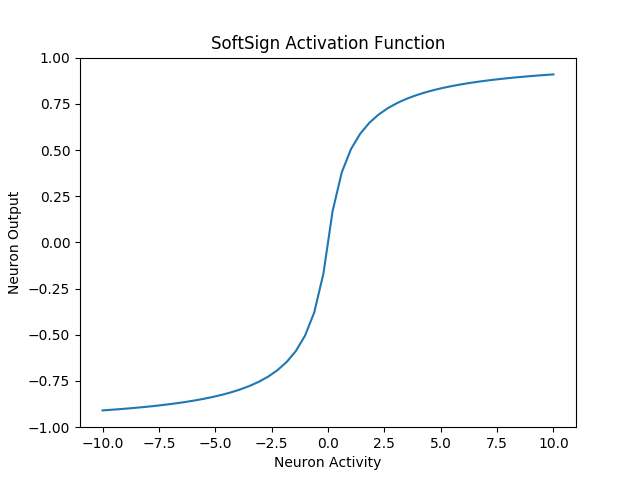

SoftSign

`# TensorFlow 1.x`
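Softsign maps inputs into (-1, 1) like tanh, but approaches its limits more slowly. A minimal plain-Python sketch, with the helper name `softsign` assumed for illustration (TensorFlow 1.x provides `tf.nn.softsign`):

```python
def softsign(x):
    """Softsign: x / (1 + |x|)."""
    return x / (1.0 + abs(x))
```

For example, `softsign(1.0)` is `0.5` and `softsign(-3.0)` is `-0.75`.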

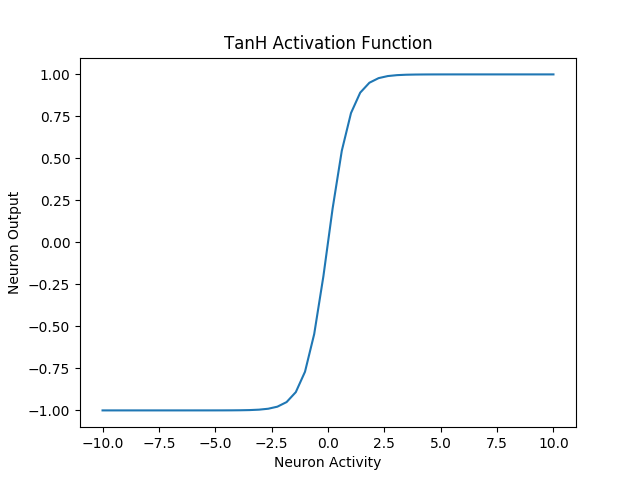

TanH

`# TensorFlow 1.x`
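The hyperbolic tangent is available in Python's standard library, so a sketch only needs to wrap it. The second function spells out the textbook definition for comparison; both names (`tanh`, `tanh_from_definition`) are assumptions for this illustration. In TensorFlow 1.x the op is `tf.tanh`.

```python
import math


def tanh(x):
    """Hyperbolic tangent via the standard library; output lies in [-1, 1]."""
    return math.tanh(x)


def tanh_from_definition(x):
    """(exp(x) - exp(-x)) / (exp(x) + exp(-x)); fine for moderate |x| only."""
    # math.exp overflows for very large |x|, so prefer math.tanh in practice.
    ep, en = math.exp(x), math.exp(-x)
    return (ep - en) / (ep + en)
```

The two agree to floating-point precision for moderate inputs; only `math.tanh` is safe for arbitrarily large magnitudes.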